The query and CPU time increase:

- for queries where the column cardinality is high (more unique values). For example, for ss_customer_sk, where we have 65M unique values, the query spends 27% of its time on CPU processing.
- for queries where the column is not part of a cluster key. A cluster key in Snowflake presorts the data. For example, ss_item_sk, which is part of the cluster key, runs in 85s versus 105s for ss_hdemo_sk, even though ss_item_sk has 56x more unique values than ss_hdemo_sk.
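To see why presorted (clustered) data can make DISTINCT cheaper, here is a simplified model in Python. It is an illustration under an assumption, not a description of how Snowflake's execution engine actually works: if the input arrives already sorted on the column, duplicates are adjacent, so a streaming pass only needs to remember the previous value instead of a hash table holding every unique value seen so far. The function name `distinct_presorted` is hypothetical.

```python
from typing import Any, Iterable, Iterator


def distinct_presorted(sorted_values: Iterable[Any]) -> Iterator[Any]:
    """Streaming DISTINCT over input that is already sorted on the column.

    Assumption: equal values are adjacent (as they would be in presorted data),
    so only the previous value is kept in memory, however many unique
    values the column has.
    """
    missing = object()  # sentinel: no previous value yet
    previous = missing
    for v in sorted_values:
        # A new run of equal values starts whenever v differs from the previous value
        if previous is missing or v != previous:
            yield v
            previous = v


# Usage: unique keys from a presorted column
print(list(distinct_presorted([1, 1, 2, 2, 2, 5])))  # [1, 2, 5]
```

By contrast, a hash-based DISTINCT over unsorted input has to keep one hash-table entry per unique value, so its memory grows with the column's cardinality.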

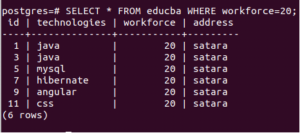

`SELECT DISTINCT SS_CUSTOMER_SK from "SAMPLE_DATA".`

Note: This is a table from the 10 TB TPC-DS sample data that ships with Snowflake. We ran the query on a Medium (M) virtual warehouse. The query returns the 65M unique customers who made a purchase. It returned ~96GB and spent 27% of its time on Processing, in other words, on data processing by the CPU. As you can see, DISTINCT is an expensive, CPU (and also memory) intensive operation.

Under the hood, the database and its Cost Based Optimizer use various algorithms to identify the unique set of values in a list. A simple algorithm runs two nested loops: for every element, it checks whether that element has appeared before, and if it hasn't, it adds the value to the unique list. We can use sorting to make the algorithm more efficient: first sort the input so that all occurrences of each element are adjacent, then traverse the sorted input to identify each unique element. Another efficient algorithm uses hashing: we traverse the input, store the unique values in a hash table, and use a lookup to check whether each value has already been seen.

Let's go through some examples to illustrate this. In this scenario we select the unique values of various attributes from the STORE_SALES table:

`SELECT DISTINCT <column_name> from "SAMPLE_DATA".`
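The three approaches described above (nested loops, sort then scan, and hashing) can be sketched in Python as follows. This is a minimal illustration of the general techniques, not the algorithms Snowflake or its optimizer actually use; the function names are hypothetical.

```python
from typing import Any, Iterable, List


def distinct_nested_loops(values: Iterable[Any]) -> List[Any]:
    """O(n^2): for every element, scan the unique list built so far."""
    unique: List[Any] = []
    for v in values:
        seen = False
        for u in unique:  # inner loop: has v appeared before?
            if u == v:
                seen = True
                break
        if not seen:
            unique.append(v)  # first occurrence: add it to the unique list
    return unique


def distinct_sort(values: Iterable[Any]) -> List[Any]:
    """O(n log n): sort so equal values are adjacent, then keep each run's first value."""
    unique: List[Any] = []
    for v in sorted(values):
        if not unique or unique[-1] != v:
            unique.append(v)
    return unique


def distinct_hash(values: Iterable[Any]) -> List[Any]:
    """O(n) expected: keep seen values in a hash set and look each one up."""
    seen = set()
    unique: List[Any] = []
    for v in values:
        if v not in seen:  # hash lookup
            seen.add(v)
            unique.append(v)
    return unique


rows = [3, 1, 3, 2, 1]
print(distinct_nested_loops(rows))  # [3, 1, 2]
print(distinct_sort(rows))          # [1, 2, 3]
print(distinct_hash(rows))          # [3, 1, 2]
```

All three return the same set of values; only the order differs, which is fine because SQL's DISTINCT does not guarantee an output order without an ORDER BY. The hash version's `seen` set grows to one entry per unique value, which is one reason high-cardinality columns cost more memory.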
